The Risks that Poor Environmental Conditions Pose to Your IT Hardware

Recently, media outlets covered a former Platte River Networks employee's comments about the company. According to the former employee, Platte River, for a short time, maintained Hillary Clinton’s private email server in “server racks in the bathroom” at its office. This was during the time Mrs. Clinton served as U.S. Secretary of State. Platte River Networks denied this days later.

Hillary's Email Server was run out of an old bathroom closet – New York Post

Platte River Networks: Clinton e-mail server was never in Denver – Denver Post

Cybersecurity firm hired by Hillary Clinton: 'We would never have taken it on' if we knew of the ensuing chaos – Business Insider

True or not, the idea of our Secretary of State’s classified email sitting on a server in a bathroom gives IT pros some comic relief, but it also raises the question: What conditions do servers need for optimal security and performance?

Servers and network gear are built to run under certain environmental conditions. Factors like temperature, airflow, humidity, power, positioning, and cabling all come into play.

Bad Ideas: Examples of Poor Environmental Conditions for Servers and Network Gear

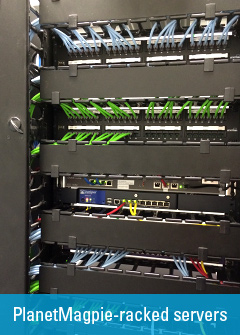

Over the years, PlanetMagpie’s consultants have encountered servers and network gear in a wide range of environmental conditions. When the conditions are good, IT hardware typically performs with minimal issues. When conditions are bad, our Support phone rings a lot!

Past examples:

- “Server Hay Bale”. Servers haphazardly stuffed in a closet, with wires that look like spaghetti.

We thought we had seen it all until we were called in for a recovery at a company with a billion-dollar market cap. Over 20 servers stacked on a folding table. That’s quite a bit of weight, especially when they are stacked on top of each other in the middle of the table. Needless to say, the particle board table couldn’t stand the strain, and they all ended up in a heap on the floor. Network interfaces tore out of the servers, chassis dented, hard drives damaged.

Risk: Overheating, Dust, Falling Objects, Difficult Disaster Recovery

Takeaway: Companies that treat their servers like that usually aren’t very good about backups either. End result: critical services were back in two days, but other services took over two weeks to restore, and the company did suffer some data loss. It also didn’t help their Sarbanes-Oxley report that year.

- Halogen lights pointed directly at network cables, melting the cable casings. We have seen work lights zip-tied to the back of two-post racks. Those lights can melt a Cat6 cable or power cord in minutes.

Risk: Heat

Takeaway: Keep the Fire Department’s number handy and your resume up to date.

- A server placed in a small, vented closet at a metal fabrication company. The venting gave airborne metal particles produced during fabrication an easy path into the server closet. The particles were magnetized by the turning process, which added another wrinkle to this story. The result? Repeated hard drive failures.

Risk: Particles (Metal)

Takeaway: Expect to replace hard drives every 30 to 60 days and make sure you have a great offsite backup.

- Server on the floor, resting on carpet. This one isn’t so bad if you don’t have a cleaning service. It applies to desktops and servers alike. Here’s what happens – the cleaning crew comes in and vacuums the carpets. The carpet fibers are lifted up in the process. The fans in the servers and desktops suck them in. We have seen CPU fans so packed with carpet fiber they can no longer rotate. We get the call when the server or desktop overheats.

Risk: Static Electricity, Falling Objects, Spilled Beverages, Dust

Takeaway: For desktops, buy small form factor computers you can place behind your monitor on your desk. Servers need a location without carpet; racking is the best option.

- Room with poor ventilation (or none). Servers, especially the Dell variety, create quite a bit of heat. We have seen a real, honest-to-goodness server room with raised floors hit over 90 °F in less than an hour. Sure, that room had 20 or 30 servers, but even one server in a tiny closet can overheat the space in minutes.

Risk: Heat, Dust, Moisture

Takeaway: Yes, a bathroom does mean you can turn the vent fan on, but all it takes is one toilet mishap. Servers are hydrophobic.

- Open windows, doors, or air ducts leading into the room. Dust and smoke are a disk drive’s worst enemy. A dust particle is many times larger than the gap between the heads and the platter. Once one gets in, the scratches start adding up on your precious disks.

Risk: Dust

Takeaway: A lesson we learned a long time ago from IBM: never shut down computers or servers for long periods. 99% of disk issues appear when the platters relax and you restart the server or computer. This is especially true in a warm environment. The heads sag and scrape on the disk, causing damage that is usually irreparable.

- Server right next to an AC unit with ice on it. Result: a complete Exchange Server failure. To restore it, we tried one backup tape … and another, and another. All were useless. Static had destroyed the integrity of the tapes. We finally built a new server, exported all the OST files to PST, and imported them into the new Exchange Server. Downtime: 2 days. But it took two weeks to restore all the data from the PST files.

Risk: Moisture, Static Electricity

Takeaway: Air conditioners are not supposed to run at 60 °F nonstop. In fact, unless you have humidity-control units, overcooling dries out the air and encourages static electricity, which can cause lots of damage.

- Network gear installed in a ceiling crawl space. We have seen this more times than we care to remember. You go onsite, ask where the network equipment is, and they just point at the ceiling! Those spaces get hot, and on very hot days the network typically starts dropping. Also, if you have home-grade equipment, like repurposed Netgear or Cisco Linksys gear, you have to grab a ladder at least once a week to reboot it.

Risk: Overheating

Takeaway: Keep your network gear in a protected accessible location. Always buy business-grade equipment for business purposes. You will pay more in the long run if you cheap out on your gear.

The Environmental Risks Threatening Your IT

Each of the “Risks” above represents a potential for damage to the server. When setting up a server or network gear, you must consider all these Risks.

Let’s go into more detail on what kind of damage the Risks represent.

- Heat: When too hot, electronic components inside a server (like the CPU) can fail or wear out early. In the worst case, overheating can start a fire.

- Cold: A server room can become too cold. Overcooled air tends to be dry, and dry air lets static electricity build up. Touch the server while carrying that extra charge and you get a ZAP!

- Static Electricity: Walking on a carpet and then touching a server? You may have built up enough static electricity to damage its motherboard.

- Particles: Tiny particles in the air (e.g., metal shavings) get sucked into the server’s fans, causing a clog or damaging internal components.

- Falling Objects: Objects falling on a server (or worse, the whole server falling!) mean dents in the case, cracked components, and a high chance of total server failure.

- Dust: We’ve all seen how dust cakes onto a fan over time. When dust cakes inside a server, it can clog the fans keeping it cool. Then you have a “Heat” problem.

- Moisture: Getting water into any computer is bad—instant short-circuit.

- Access: Who has open access to the servers? Limit access only to people who need it. Not only is this a security issue, but too many people with access means higher risk for all the above.
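The Moisture risk doesn't require a leak or a spill. Any surface colder than the air's dew point collects condensation, which is one plausible way water reaches gear sitting next to an iced-over AC unit like the one in the Exchange example above. The sketch below estimates the dew point with the Magnus approximation. The constants, the 2 °C safety margin, and the function names are assumptions for illustration, not values from any specific monitoring product.

```python
import math

# Magnus-formula constants over liquid water: a common approximation,
# reasonable for typical indoor temperatures. Assumed for this sketch.
MAGNUS_A = 17.62
MAGNUS_B_C = 243.12  # degrees Celsius


def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def dew_point_c(temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point in degrees Celsius from air temperature and relative humidity."""
    if not 0.0 < rel_humidity_pct <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B_C + temp_c)
    return MAGNUS_B_C * gamma / (MAGNUS_A - gamma)


def condensation_risk(air_temp_c: float, rel_humidity_pct: float,
                      surface_temp_c: float, margin_c: float = 2.0) -> bool:
    """True if a surface is colder than, or within `margin_c` degrees of, the air's dew point.

    `margin_c` is a hypothetical safety buffer, not a standard value.
    """
    return surface_temp_c <= dew_point_c(air_temp_c, rel_humidity_pct) + margin_c


if __name__ == "__main__":
    room_c = f_to_c(73.0)  # mid-point of the 72-74 F range recommended in this article
    print(f"Dew point: {dew_point_c(room_c, 50.0):.1f} C")
    print("Iced coil at 0 C collects water:", condensation_risk(room_c, 50.0, 0.0))
```

At 73 °F and 50% relative humidity, the dew point works out to about 12 °C (roughly 53 °F), so an ice-covered coil at 0 °C sits far below it and will collect water.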

Ideal Server Conditions

Now that we’ve seen what not to do, let’s look at what we should do. Here’s our best advice for companies who want to get the longest life out of their network hardware.

- Server racks positioned on a raised floor. Raised floors keep cabling and airflow beneath the server racks, which is safer and more efficient for cooling. Plus, they protect the servers in case of minor flooding.

- Cables organized & kept off the floor, to prevent tripping.

- Temperature-controlled environment. 72–74 °F is optimal if you do not have equipment to manage humidity. Install a temperature monitor that sends alerts when the temperature changes.

- Redundant power to protect the servers in the event of a power outage. The older the server, the less it likes unexpected shutdowns. It may not reboot.

- Security to get into the server room. Limit access not only for security, but to prevent too much dust getting in from the door opening & closing.

- Proper ventilation to keep particles out of the air.
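A temperature monitor doesn't need to be elaborate to be useful. Below is a minimal sketch, assuming readings in °F arrive from a sensor you already have (reading the sensor is out of scope here). It raises an alert when the room leaves a band and clears it only after the reading recovers by a small hysteresis margin, so a reading hovering at the threshold doesn't flood your inbox. It also flags a fast rise, like the server room that passed 90 degrees in under an hour. The class name, function name, and every threshold (alerts at 80 °F and 60 °F, 2 °F hysteresis, 5 °F per hour rise) are hypothetical defaults to tune for your own room.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TemperatureAlarm:
    """Band alarm with hysteresis for server-room readings in degrees Fahrenheit.

    Thresholds are hypothetical defaults; tune them for your own room.
    """
    high_f: float = 80.0       # alert when a reading is at or above this
    low_f: float = 60.0        # alert when a reading is at or below this
    hysteresis_f: float = 2.0  # how far a reading must recover before the alert clears
    state: str = "ok"          # one of "ok", "high", "low"

    def update(self, reading_f: float) -> Optional[str]:
        """Feed one reading; return an event message if the state changed, else None."""
        if self.state == "ok":
            if reading_f >= self.high_f:
                self.state = "high"
                return f"ALERT: {reading_f:.1f} F is at or above {self.high_f:.1f} F"
            if reading_f <= self.low_f:
                self.state = "low"
                return f"ALERT: {reading_f:.1f} F is at or below {self.low_f:.1f} F"
        elif self.state == "high" and reading_f <= self.high_f - self.hysteresis_f:
            self.state = "ok"
            return f"CLEAR: {reading_f:.1f} F is back in range"
        elif self.state == "low" and reading_f >= self.low_f + self.hysteresis_f:
            self.state = "ok"
            return f"CLEAR: {reading_f:.1f} F is back in range"
        return None


def rising_too_fast(samples: List[Tuple[float, float]],
                    max_rise_f_per_hour: float = 5.0) -> bool:
    """Compare the first and last (timestamp_seconds, reading_f) samples and
    return True if the average rise exceeds `max_rise_f_per_hour`."""
    if len(samples) < 2:
        return False
    (t0, f0), (t1, f1) = samples[0], samples[-1]
    hours = (t1 - t0) / 3600.0
    if hours <= 0:
        return False
    return (f1 - f0) / hours > max_rise_f_per_hour


if __name__ == "__main__":
    alarm = TemperatureAlarm()
    for reading in [73.0, 76.5, 80.2, 81.0, 79.0, 77.5, 73.5]:
        event = alarm.update(reading)
        if event:
            print(event)
    # An 8 F rise in 30 minutes is 16 F/hour, well over the 5 F/hour default
    print(rising_too_fast([(0, 72.0), (1800, 80.0)]))
```

With the sample readings, the alarm fires once at 80.2 °F and clears once at 77.5 °F. The 79.0 °F reading does not clear it, because it hasn't yet dropped 2 °F below the threshold. That hysteresis is the design choice that keeps one borderline room from generating dozens of alerts.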

If you don’t have a good on-site location for your servers, you have other options. Consider colocation or cloud hosting. Both place your servers in an environment purpose-built to maximize server life.

(You can always verify this yourself. Ask the cloud host or colocation provider for a report on their environmental conditions.)

The next time you need to work on a server, evaluate its environment against this list. It might be at risk.

PlanetMagpie is happy to help optimize server conditions (we have a lot of practice!). Please contact us if you’re not sure about the condition of yours.